It’s deployment day! But do you know your AWS resource limits?

By Lauren Rodgers on January 8, 2020

In the engineering world, deploying on a Friday afternoon is a major rookie move: the last thing anyone needs is a weekend spent rolling back updates and debugging someone else's code!

However, no matter what time of day or day of the week you choose to roll out new changes, part of you can’t help worrying that something hasn’t been accounted for. In this blog, I’ll discuss one of the newer features at Peak that helps our deployments run that little bit more smoothly: monitoring our AWS resource limits.

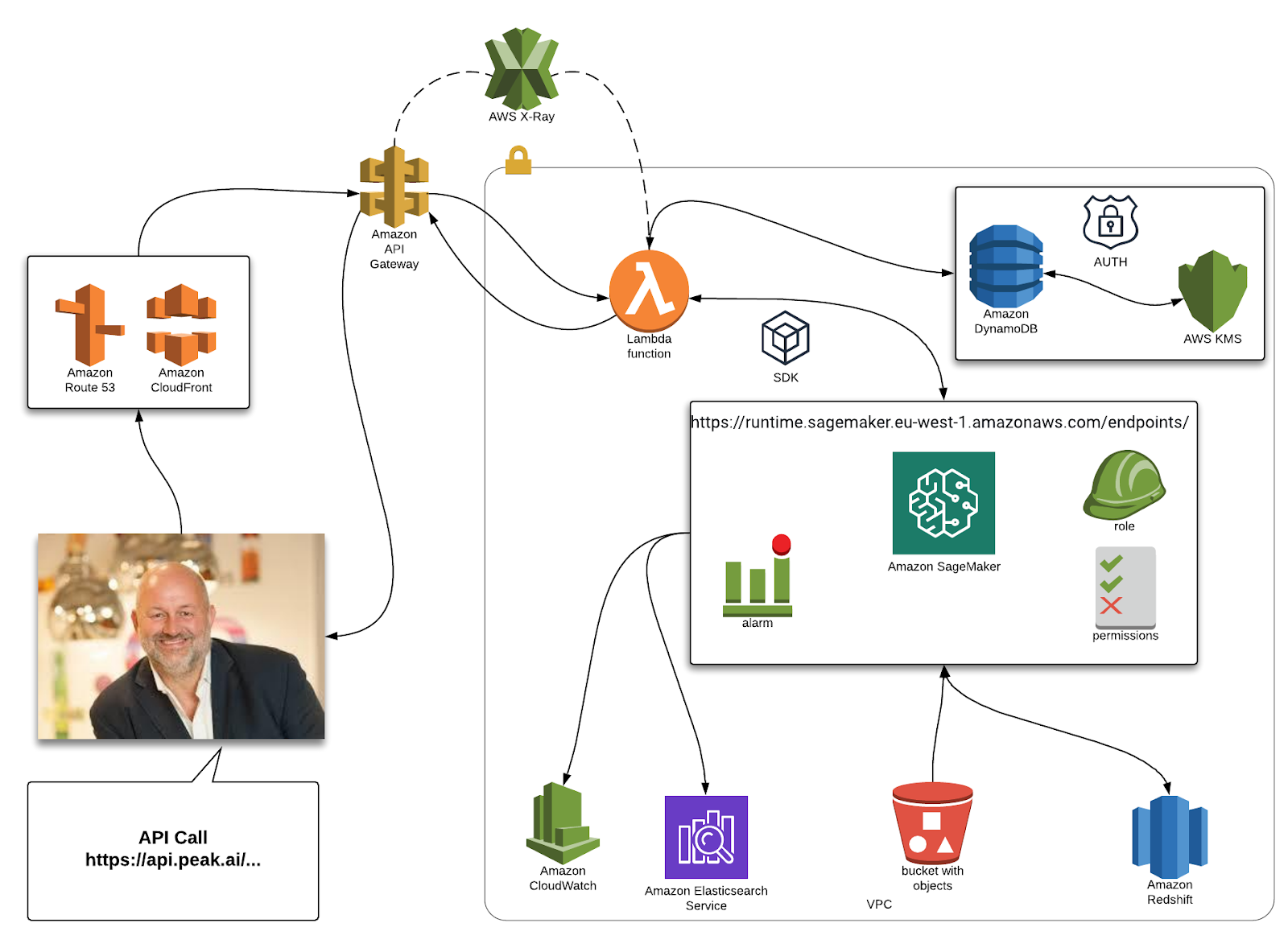

At Peak, the majority of our cloud architecture is hosted on Amazon Web Services (AWS), so one area we have to be continuously mindful of is our resource usage. As Peak’s customer base grows, we use more services and resources, and keeping track of an account’s status when many AWS resources are in use at once can be a challenge. Thankfully, help is at hand: AWS provides several services for monitoring your resource limits.

AWS Services

Every new AWS account is given a set of default service limits for the resources AWS provides. The default values vary depending on the region in which the resources are created. These limits are in place so that AWS can fulfill its guarantee of on-demand, readily available resources for all account holders. After all, it would be unfair if one account holder in the eu-west-1 region ruled them all. Some limits (also referred to as quotas) can be increased by making a request via the AWS Support Center. This may sound daunting given the long, long list of services AWS provides, but don’t panic: AWS has kindly provided some tools to help! Let’s discuss some of them…

AWS Service Quotas Console

The Service Quotas tool allows you to monitor and manage AWS resource limits for your account from one central location. It also supplies a full list of AWS services and the default quota values for your account. If you wish to investigate your own limits, or discover which ones you can adjust, I recommend visiting the Service Quotas console and selecting the AWS services option from the list. To illustrate this, the image below shows the console view for some of the default quotas on our account for the CloudFormation service. I chose CloudFormation for no other reason than it being one of my favorite services, but I digress! You may notice that the three quotas identified below cannot be adjusted, so tough luck if you had planned on declaring more than 100 mappings in your YAML/JSON template. If a value is populated in the Applied quota value column, this simply means that you have previously requested an increase for that quota, and the applied value automatically overrides the default.
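The same default-versus-applied comparison the console shows can also be pulled programmatically. Below is a minimal sketch, assuming a boto3 `service-quotas` client is passed in; `list_aws_default_service_quotas` and `list_service_quotas` are real Service Quotas API calls, but the helper names `fetch_all` and `compare_quotas` are hypothetical.

```python
from typing import Any, Dict, List


def fetch_all(method, **kwargs) -> List[Dict[str, Any]]:
    """Follow NextToken pagination and collect every entry under "Quotas"."""
    quotas: List[Dict[str, Any]] = []
    token = None
    while True:
        call_kwargs = dict(kwargs)
        if token:
            call_kwargs["NextToken"] = token
        page = method(**call_kwargs)
        quotas.extend(page.get("Quotas", []))
        token = page.get("NextToken")
        if not token:
            return quotas


def compare_quotas(client, service_code: str) -> List[Dict[str, Any]]:
    """Pair each AWS default quota with the account's applied value, if any."""
    defaults = fetch_all(client.list_aws_default_service_quotas,
                         ServiceCode=service_code)
    applied = {
        q["QuotaCode"]: q["Value"]
        for q in fetch_all(client.list_service_quotas, ServiceCode=service_code)
    }
    rows = []
    for q in defaults:
        rows.append({
            "name": q["QuotaName"],
            "default": q["Value"],
            # None when list_service_quotas returned no applied value.
            "applied": applied.get(q["QuotaCode"]),
            "adjustable": q.get("Adjustable"),
        })
    return rows
```

With credentials configured, `compare_quotas(boto3.client("service-quotas"), "cloudformation")` would return one row per CloudFormation quota; we assume `cloudformation` is the right service code, which the `list_services` call can confirm.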

This is great for examining your account’s service quotas and requesting increases – but how do we know what the account is currently using and when do we need to make these increases?

AWS Trusted Advisor

Along comes Trusted Advisor (TA), another AWS service that helps by monitoring AWS resource limits and usage. TA acts as a customized cloud expert that helps companies optimize their AWS resources. For the remainder of this section, let’s focus on its Service Limits category, but for illustrative purposes the image below shows the TA landing page dashboard in the AWS console, along with the other categories TA provides.

Part of the Service Limits feature is the ability to run ad hoc checks across your services on a per-region basis. Once the Service Limits category is selected, the checks run and scan most of the popular services. On completion, each check is assigned a status: green when no problems are detected, yellow when a resource is at 80% or more of the account limit, and red when it reaches 100% of the limit. If you wish to increase the limit for a particular service, you can go directly to the AWS Support Center and create a case, or use the links provided within TA.
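To make the colour scheme concrete, here is a tiny helper that reproduces the thresholds described above. It is a sketch of the rule only, not Trusted Advisor's own code, and the function name `trusted_advisor_status` is hypothetical. Integer-friendly comparisons avoid floating-point surprises right at the 80% boundary.

```python
def trusted_advisor_status(current_usage: float, limit: float) -> str:
    """Green below 80% of the limit, yellow from 80%, red at 100% or more."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    if current_usage >= limit:
        return "red"
    if current_usage * 100 >= 80 * limit:
        return "yellow"
    return "green"
```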

Limitations

Although AWS does provide these fantastic tools and offers support for keeping track of your AWS resource limits, these services come with some minor caveats: for instance, not every service is listed in the Service Quotas console, and TA tends to cover only the most popular services.

How does Peak monitor this?

To tackle the caveats of TA and Service Quotas mentioned above, Peak has created an automated report, and our DevOps engineers are alerted on Slack once it is ready to view. This promotes a more autonomous approach that doesn’t rely on any particular colleague manually monitoring our resource usage.

The process involves multiple resources, but here is a high-level overview of the architecture. It starts with a CloudWatch rule that acts as a scheduler, triggering the Task Definition to run once a week. The Task Definition uses a Docker image from the ECR repository, so we can update the image whenever required. The Docker image contains a Python script that uses awslimitchecker and builds a report of the findings. The report is stored in an S3 bucket, which triggers a Lambda function that uses SNS to send an alert to Slack via a webhook. Below is a pretty diagram illustrating how all the services work together.
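The final step of that pipeline, turning "a report landed in S3" into a Slack alert, might look roughly like the sketch below. It is not Peak's actual function: to keep it short it skips the SNS hop and posts straight to a Slack incoming webhook, and the handler name `lambda_handler`, the helper names and the `SLACK_WEBHOOK_URL` environment variable are all hypothetical. The S3 event fields it reads (`Records[0].s3.bucket.name` and `Records[0].s3.object.key`) follow the standard S3 event notification format.

```python
import json
import os
import urllib.parse
import urllib.request
from typing import Any, Dict


def build_slack_payload(event: Dict[str, Any]) -> Dict[str, str]:
    """Turn an S3 "object created" event into a Slack message payload."""
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    # Object keys in S3 event notifications arrive URL-encoded.
    key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
    return {"text": f"Weekly AWS limits report is ready: s3://{bucket}/{key}"}


def post_to_slack(webhook_url: str, payload: Dict[str, str]) -> int:
    """POST the JSON payload to a Slack incoming webhook; return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


def lambda_handler(event, context):
    """Entry point configured on the (hypothetical) Lambda function."""
    payload = build_slack_payload(event)
    status = post_to_slack(os.environ["SLACK_WEBHOOK_URL"], payload)
    return {"statusCode": status}
```

Keeping the message formatting in its own pure function means it can be tested without touching S3, SNS or Slack.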

The report is structured to show the number of checks conducted within each service, and whether any of them reach the 25%, 50%, 80%, or 90% thresholds of the account limit. If any service falls into these thresholds, the report discloses exactly which one requires attention. The snapshot below is from an old report, but it illustrates a time when one of our services went beyond the 80% threshold for the number of S3 buckets allowed. This allowed our DevOps team to take action by making a service limit increase request, or by analyzing the existing buckets and removing redundant ones.
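The banding logic behind such a report can be sketched as follows. The input shape (service name mapped to `{limit name: (usage, limit)}`) is a hypothetical stand-in for whatever the report script collects, for example from awslimitchecker, and the function names are illustrative only. Each limit is placed in the highest band it has reached.

```python
from typing import Dict, List, Optional, Tuple

THRESHOLDS = (25, 50, 80, 90)  # percentages of the account limit, ascending


def highest_threshold(usage: float, limit: float) -> Optional[int]:
    """Return the highest threshold the usage has reached, or None."""
    if limit <= 0:
        return None  # unknown or unlimited limits are skipped
    reached = [t for t in THRESHOLDS if usage * 100 >= t * limit]
    return reached[-1] if reached else None


def build_report(
    usage_by_service: Dict[str, Dict[str, Tuple[float, float]]]
) -> Dict[str, dict]:
    """Count checks per service and list which limits fall into each band."""
    report = {}
    for service, limits in sorted(usage_by_service.items()):
        bands: Dict[int, List[str]] = {t: [] for t in THRESHOLDS}
        for name, (usage, limit) in sorted(limits.items()):
            band = highest_threshold(usage, limit)
            if band is not None:
                bands[band].append(f"{name} ({usage:g}/{limit:g})")
        report[service] = {"checks": len(limits), "bands": bands}
    return report
```

For instance, 85 buckets against a limit of 100 would land in the 80% band, mirroring the S3 example from the old report.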

Next steps

At Peak, being autonomous in our work is really important to us, so although the current report pipeline is a step beyond checking our limits manually, there is always room for improvement! One feature we’d like to add concerns services that reach the 90% threshold: an event would be triggered to create a service limit increase case, ready to be submitted to AWS. Obviously, we wouldn’t want to increase every limit whenever we approach a quota, so this is where we can introduce some user prompting. An email or message could be sent to the AWS account admin with the option to approve the support case, or to decline it and review the existing resources instead.
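A minimal sketch of that approve-or-decline flow is shown below. It swaps in a different mechanism from the one described: instead of opening a Support Center case, it uses the Service Quotas `request_service_quota_increase` API (real, via a boto3 `service-quotas` client). The `QuotaUsage` type, the 1.5x growth factor and the `approve` callback (which in practice might wait on a Slack or email reply) are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class QuotaUsage:
    service_code: str  # Service Quotas service code, e.g. "s3"
    quota_code: str    # quota code such as "L-XXXXXXXX" (placeholder)
    usage: float
    limit: float


def drafts_at_threshold(items: Iterable[QuotaUsage], threshold_pct: int = 90,
                        growth: float = 1.5) -> List[dict]:
    """Draft an increase request for every quota at or above the threshold."""
    drafts = []
    for q in items:
        if q.limit > 0 and q.usage * 100 >= threshold_pct * q.limit:
            drafts.append({
                "ServiceCode": q.service_code,
                "QuotaCode": q.quota_code,
                "DesiredValue": float(math.ceil(q.limit * growth)),
            })
    return drafts


def submit_approved(client, drafts: List[dict],
                    approve: Callable[[dict], bool]) -> List[str]:
    """Submit only the drafts the admin approves; return the request IDs."""
    request_ids = []
    for draft in drafts:
        if approve(draft):  # declined drafts are left for a resource review
            response = client.request_service_quota_increase(**draft)
            request_ids.append(response["RequestedQuota"]["Id"])
    return request_ids
```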

Furthermore, when we deploy new releases in the future, we want to be able to calculate what extra resources will be required and whether they would breach any of our existing limits. From previous experience, I can say that figuring out why something failed because it hit a limit is by no means easy to debug.
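At its core, such a pre-deployment check is simple arithmetic: current usage plus what the release needs, compared against the limit. The sketch below assumes hypothetical inputs, with `current` mapping a resource to `(usage, limit)` (which could come from the weekly report data) and `required` mapping a resource to the extra units a release needs; working out `required` from a release is the hard part and is not shown.

```python
from typing import Dict, List, Tuple


def projected_breaches(current: Dict[str, Tuple[float, float]],
                       required: Dict[str, float]) -> List[str]:
    """List resources whose usage after deployment would exceed the limit."""
    problems = []
    for resource, extra in sorted(required.items()):
        if resource not in current:
            continue  # no known limit for this resource; nothing to compare
        usage, limit = current[resource]
        projected = usage + extra
        if projected > limit:
            problems.append(f"{resource}: needs {projected:g}, limit is {limit:g}")
    return problems
```

Landing exactly on the limit is not flagged here, since the deployment would still succeed; a stricter variant could flag anything above, say, the 90% threshold.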

This brings my blog to an end – thanks for taking the time to read my first ever piece, have a great day, and happy coding!
